Zhang Dan's research group in the Department of Psychology at Tsinghua University has published a paper titled "EEG-based speaker-listener neural coupling reflects speech-selective attentional mechanisms beyond the speech stimulus" in the international neuroscience journal Cerebral Cortex. The paper compares the coupling between the speech stimulus and the listener's neural activity with the coupling between the speaker's and the listener's neural activity, and explains the unique "beyond-the-stimulus" role of inter-brain neural coupling in the transfer of speech information.

At a noisy, bustling cocktail party, people can follow the conversation they want to hear amid the clinking of glasses while ignoring other sounds. Selective attention plays a crucial role in this process (Cherry, 1953). But do listeners merely attend to the sound produced by the speaker and the literal meaning of the message (i.e., the stimulus itself), or do they go further and form a shared mental space with the person they are attending to? This question has long been debated by researchers in different fields (Gadamer, 1975; Searle, 1980; Hartley & Poeppel, 2020). In neuroscience, however, relevant empirical evidence has been relatively scarce.

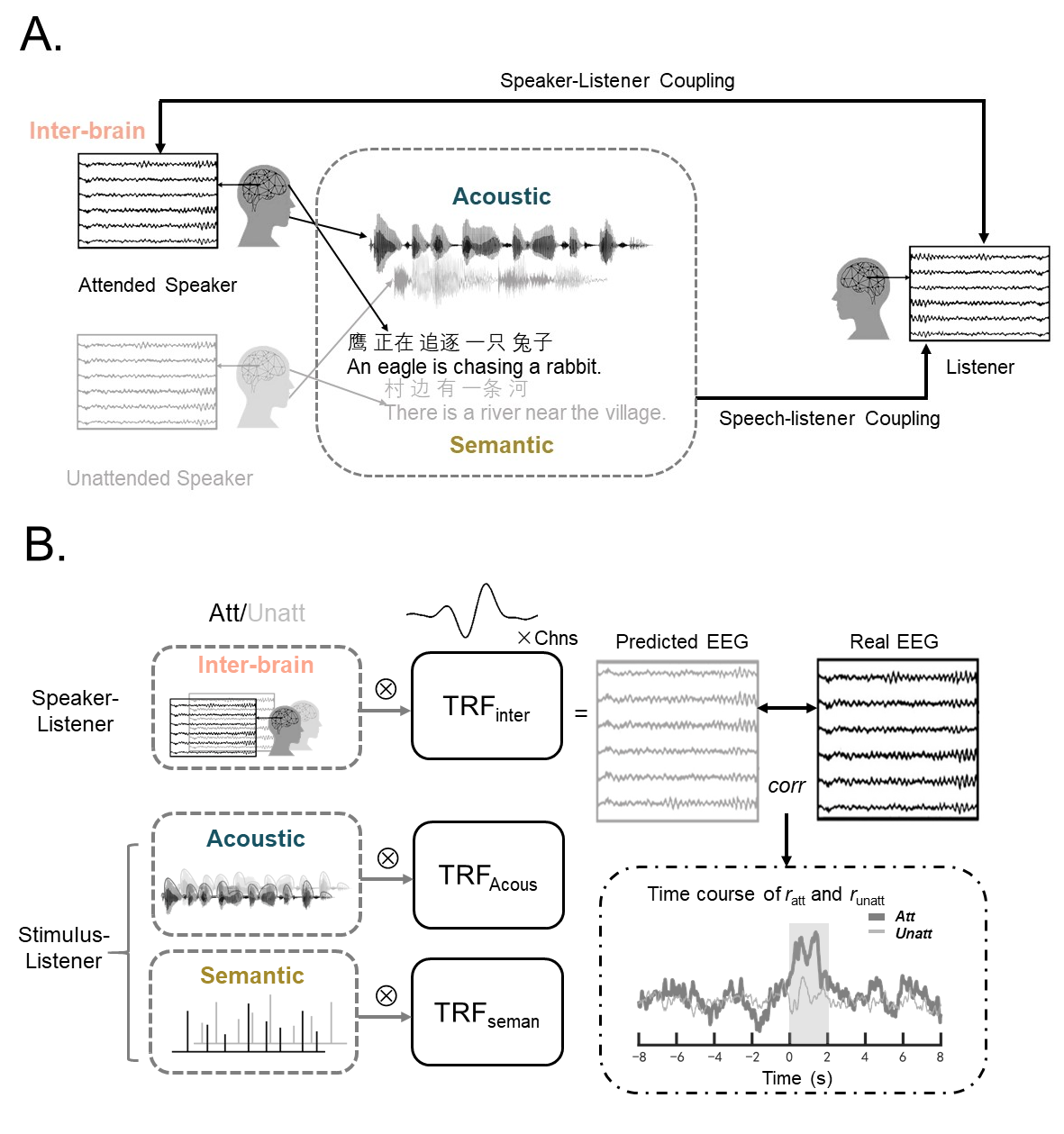

Figure 1: Experimental Paradigm and Analysis Methods

A) Illustration of speaker-listener neural coupling and speech stimulus-listener neural coupling;

B) Representation and computational models (Temporal Response Function, TRF) for acoustic, semantic, and inter-brain features

This study used natural, vivid, continuous speech as stimulus material, extended the representation of speech information used in previous studies, and distinguished two information-processing modes: speaker-listener neural coupling and speech stimulus-listener neural coupling (Figure 1A). The study also used temporal response function (TRF) modeling to model three types of features (acoustic, semantic, inter-brain) under the two kinds of coupling (speaker-listener vs. speech stimulus-listener) within a single framework, so that neural responses to attended and unattended features could be compared (Figure 1B).
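As a rough illustration of what a temporal response function estimates, the following minimal sketch fits a single-feature TRF by ridge regression on time-lagged copies of a simulated feature. It is not the authors' pipeline: the function names (`lagged_design`, `fit_trf`), the simulated data, the lag range, and the ridge parameter are all assumptions made for illustration.

```python
import numpy as np


def lagged_design(feature, lags):
    """Build a design matrix whose columns are time-shifted copies of `feature`.

    Column j holds feature[t - lags[j]]; samples shifted in from outside the
    recording are left at zero. A negative lag means the response precedes
    the feature.
    """
    n = len(feature)
    X = np.zeros((n, len(lags)))
    for j, lag in enumerate(lags):
        if lag >= 0:
            X[lag:, j] = feature[:n - lag]
        else:
            X[:n + lag, j] = feature[-lag:]
    return X


def fit_trf(feature, response, lags, ridge=1.0):
    """Estimate TRF weights (one per lag) with ridge-regularized least squares."""
    X = lagged_design(feature, lags)
    gram = X.T @ X + ridge * np.eye(len(lags))
    return np.linalg.solve(gram, X.T @ response)


# Simulated example (hypothetical data): the "neural" response is the feature
# delayed by 3 samples and scaled by 2, plus a little noise.
rng = np.random.default_rng(0)
n_samples = 2000
feature = rng.standard_normal(n_samples)
response = np.zeros(n_samples)
response[3:] = 2.0 * feature[:-3]
response += 0.1 * rng.standard_normal(n_samples)

lags = np.arange(-5, 11)  # lags in samples, negative and positive
weights = fit_trf(feature, response, lags)
peak_lag = int(lags[np.argmax(weights)])
print("lag with the largest TRF weight:", peak_lag)
```

In this toy setup the largest weight should sit at the simulated 3-sample delay. Including negative lags in the model is what allows a fit of this kind to detect a response that precedes the feature rather than follows it.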

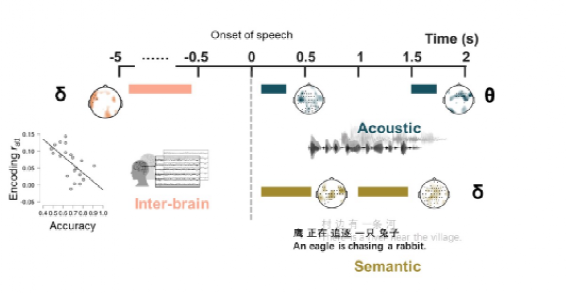

Figure 2: Different Attentional Effects of Speaker-Listener Neural Coupling and Speech Stimulus-Listener Neural Coupling

The core finding of this study is that speaker-listener neural coupling and speech stimulus-listener neural coupling show different neural response patterns (Figure 2). The attentional effect of speaker-listener neural coupling appeared 5 seconds before sound stimulus onset, in the left prefrontal area, and in the delta frequency band. This neural activity was also negatively correlated with the listener's comprehension performance and positively correlated with self-reported task difficulty. In contrast, speech stimulus-listener neural coupling appeared only after sound stimulus onset: the attentional effect of acoustic features appeared in the theta band at 200-350 milliseconds, and the attentional effect of semantic features appeared in the delta band at 200-600 milliseconds, both in the central region. The two kinds of coupling thus differed in their temporal, spectral, and spatial distributions, and only speaker-listener neural coupling correlated with behavioral results. These results highlight the uniqueness of speaker-listener neural coupling, which "goes beyond the stimulus," and indicate that in everyday verbal communication people do not merely receive information from others passively but also actively and predictively form a consensus with their communication partners.

The first author of this study is Li Jiawei, a doctoral graduate from our department (currently a Humboldt Scholar conducting research at the Free University of Berlin), and the corresponding author is Associate Professor Zhang Dan. Collaborators in this study include Professor Hong Bo from the Department of Biomedical Engineering at Tsinghua University School of Medicine, Professor Andreas Engel from the University Medical Center Hamburg-Eppendorf, and Dr. Guido Nolte.

This research was supported by the National Natural Science Foundation of China (NSFC) and the German Research Foundation (DFG) major international cooperation project "Cross-Modal Learning: Adaptivity, Prediction and Interaction" (NSFC 62061136001/DFG TRR-169/C1, B1), the National Natural Science Foundation of China's general project "Research on Key Cognitive Neural Indicators of Classroom Teaching Effectiveness Based on Group Brain Scanning" (61977041), and the Alexander von Humboldt Foundation Postdoctoral Fellowship.

Paper Information:

Li, J., Hong, B., Nolte, G., Engel, A.K., Zhang, D. (2023). EEG-based speaker-listener neural coupling reflects speech-selective attentional mechanisms beyond the speech stimulus. Cerebral Cortex, DOI: 10.1093/cercor/bhad347.

Paper Link:

https://doi.org/10.1093/cercor/bhad347

References:

Cherry EC. 1953. Some experiments on the recognition of speech, with one and with two ears. J Acoust Soc Am. 25:975–979.

Gadamer HG. 1975. Truth and Method, A Continuum book. Seabury Press.

Hartley CA, Poeppel D. 2020. Beyond the Stimulus: A Neurohumanities Approach to Language, Music, and Emotion. Neuron. 108:597–599.

Searle JR. 1980. Minds, brains, and programs. MIT Press.

Skinner BF. 1957. Verbal behavior. Appleton-Century-Crofts.